AI agents are appearing in GTM systems at a time when many organizations are carrying more operational load than expected. Teams are managing legacy workflows, uneven data, and systems that need more upkeep than planned. Leaders want relief, but they also want confidence that nothing breaks downstream. This creates a familiar tension: teams feel ready to shift more work to an agent, executives want clarity on risk, and admins want a model they can actually maintain.

Agentforce sits at the heart of this tension. It takes on defined operational responsibility while giving teams a controlled way to observe behaviour. To understand how far that can go, it helps to start with a clear definition of what an agent is.

What an Agent Actually Is

From a systems perspective, an agent is not just automation with a conversational interface. Even a year ago, that description fit the assistive “bot”, which is what we first built with clients. Today, Agentforce allows AI agents to behave more like a process owner that can make decisions, perform actions across objects or systems, interpret outcomes, and adjust its behaviour based on what it learns.

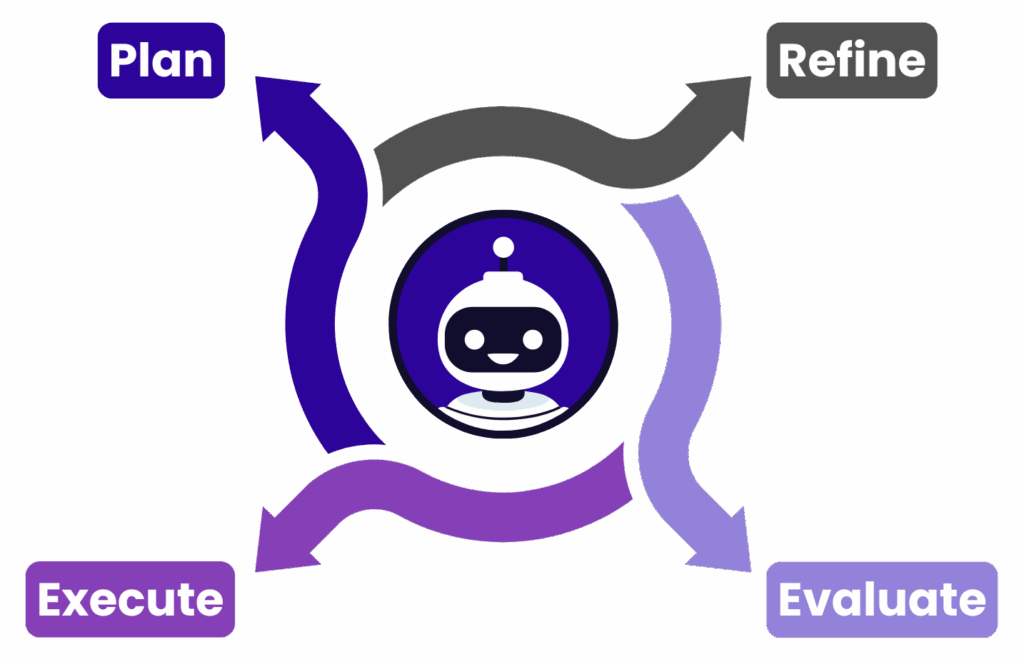

Every functional agent runs through a simple loop: Plan → Execute → Evaluate → Refine

This loop doesn’t describe the task the agent will eventually automate. It reflects the work people still need to do behind the scenes to help that agent improve. Admins define the process, lay out what “success” looks like, test early responses, and fine-tune the boundaries as the agent learns.

Behind the curtain, Agentforce is doing its own internal checks. It reviews its first pass, asks itself whether it followed instructions correctly, and tries again if it didn’t. The intention is that, over time, part of this checking gets built into the system rather than having to be reconfigured time and time again.
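To make the review-and-retry idea concrete, here is a minimal sketch in Python. It is not Agentforce's internal implementation or API. The names `run_with_self_check`, `toy_generate`, and `toy_check` are hypothetical stand-ins, assuming only that a draft can be produced, checked against instructions, and regenerated with feedback.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Result:
    output: str
    attempts: int
    passed: bool


def run_with_self_check(
    task: str,
    generate: Callable[[str, Optional[str]], str],
    check: Callable[[str], Optional[str]],
    max_attempts: int = 3,
) -> Result:
    """Produce a draft, check it, and retry with feedback until it passes.

    `generate` and `check` are hypothetical callables supplied by the caller.
    `check` returns None when the draft follows instructions, otherwise a
    short description of what went wrong.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    feedback: Optional[str] = None
    output = ""
    for attempt in range(1, max_attempts + 1):
        output = generate(task, feedback)   # first pass, or a retry
        feedback = check(output)            # did it follow instructions?
        if feedback is None:
            return Result(output, attempt, True)
    # Still failing after the last attempt: flag it for a person to review.
    return Result(output, max_attempts, False)


# Toy stand-ins so the sketch runs without any model behind it.
def toy_generate(task: str, feedback: Optional[str]) -> str:
    body = f"Following up on {task}."
    return "Hi, " + body if feedback else body


def toy_check(output: str) -> Optional[str]:
    return None if output.startswith("Hi,") else "Missing greeting"


result = run_with_self_check("the renewal quote", toy_generate, toy_check)
print(result)
```

The `passed` flag matters as much as the output: a draft that still fails after the last attempt is exactly the kind of exception a team would want surfaced for review.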

What’s important is this: agents aren’t just grabbing data and spitting out answers anymore. They’re following a structured, multi-step process that mirrors the kind of thoughtful work a human operator would do. Now let’s take a deeper look into what growth with AI looks like.

The AI Maturity Curve

Teams often place themselves higher on the maturity curve than their systems actually support. The curve shows how teams move from structured automation to meaningful ownership.

Level 0: Rules-Based Automation

Classic workflow rules, Process Builder remnants, scheduled flows, or point automations. They work when conditions are predictable.

Level 1: Agent Assist

The agent retrieves data, surfaces suggestions, or drafts content. Humans still drive every decision.

Level 2: Agent in Training

The agent executes narrow tasks end to end. Manual work decreases, but tight boundaries and clean data remain essential.

Level 3: Trusted Operator

The agent manages multistep workflows with shared context. Teams begin focusing on observability, logging, and exception handling.

Level 4: Collaborative Autonomy

Multiple agents coordinate across systems with light oversight. More work moves without manual triggers. Strong monitoring and governance are required.

Across all levels, the pattern is consistent: as the agent takes on more responsibility, the organization sees greater operational relief.
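One way to picture how responsibility shifts across the levels above is a simple routing rule that decides who acts on a proposed task. The sketch below is illustrative only. `Maturity`, `route_action`, the task names, and the routing policy itself are assumptions made for this example, not Agentforce settings.

```python
from enum import IntEnum


class Maturity(IntEnum):
    RULES_BASED = 0
    AGENT_ASSIST = 1
    AGENT_IN_TRAINING = 2
    TRUSTED_OPERATOR = 3
    COLLABORATIVE_AUTONOMY = 4


def route_action(
    level: Maturity,
    task: str,
    allowed_tasks: set[str],
    is_exception: bool = False,
) -> str:
    """Return who handles a proposed task at a given maturity level.

    The policy is an assumption for illustration:
    - Level 0: fixed rules run, so there is no agent decision to route.
    - Level 1: the agent only suggests; a person decides.
    - Level 2: the agent executes only narrow, pre-approved tasks.
    - Levels 3-4: the agent executes; people review flagged exceptions.
    """
    if level == Maturity.RULES_BASED:
        return "no_agent"
    if level == Maturity.AGENT_ASSIST:
        return "human_decides"
    if level == Maturity.AGENT_IN_TRAINING:
        if task in allowed_tasks and not is_exception:
            return "agent_executes"
        return "human_decides"
    return "human_reviews_exception" if is_exception else "agent_executes"


allowed = {"draft_follow_up_email"}  # hypothetical task name
for level in Maturity:
    print(level.name, route_action(level, "draft_follow_up_email", allowed))
```

The same task gets a different owner at each level, which is the practical meaning of moving up the curve.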

Responsibility as the Driver of ROI

Revenue leaders may also be asking how the cost of Agentforce compares to hiring another analyst or admin. It is a valid question, but it does not capture how workload shifts across maturity levels. At Levels 1 and 2, teams still own most of the operational load. At Levels 3 and 4, teams move from executing work to reviewing exceptions and updating guardrails. This may not mean an organization can confidently replace people with AI today, but it is reasonable to start redefining roles in terms of responsibility.

The reality is, human oversight isn’t going anywhere. What’s changing is the scope of that oversight. Some organizations manage this in-house; others lean on partners. Either way, what stays constant is the need for a structured approach to monitoring: data quality, agent behaviour, and edge-case handling across different environments still require thoughtful evaluation.

A simple principle holds across maturity levels: AI creates value when it owns part of a workflow, not when it only assists it.

How Agents Learn in Real Systems

Teams that gain traction with Agentforce treat it like any other managed component in their tech stack. They define the workflow, set constraints, observe behaviour, and refine as the agent learns.

Some patterns emerge consistently. Agents improve when object relationships and field definitions stay stable. Learning is faster when the agent can see both expected and unexpected outcomes, and confidence grows when teams can surface logs, exceptions, and decision paths. Here are some examples:

Example 1: An agent may start by drafting follow-up emails based on opportunity stage and account history. After a few review cycles, it may update supporting fields once a rep approves the message. Over time, it can own the full loop for repeatable scenarios.

Example 2: An agent that assists with lead scoring may begin with suggestions. As teams review deviations, the agent adjusts its reasoning. With enough cycles, routing becomes faster and more consistent with fewer overrides.

In both cases, the agent grows because the workflow is structured and reviewed, not because the system is complex.
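A structured review can be as simple as tracking how often people override the agent in each review cycle. The sketch below assumes a hypothetical review log of `(cycle, agent_suggestion, final_value)` tuples. The function name, the routing labels, and the sample data are all made up for illustration and are not measured results.

```python
from collections import defaultdict
from typing import Iterable


def override_rate_by_cycle(
    reviews: Iterable[tuple[int, str, str]],
) -> dict[int, float]:
    """Share of agent suggestions that a reviewer changed, per review cycle."""
    totals: dict[int, int] = defaultdict(int)
    overrides: dict[int, int] = defaultdict(int)
    for cycle, suggested, final in reviews:
        totals[cycle] += 1
        if suggested != final:      # the reviewer changed the agent's choice
            overrides[cycle] += 1
    return {cycle: overrides[cycle] / totals[cycle] for cycle in sorted(totals)}


# Illustrative sample only: lead-routing suggestions across two review cycles.
reviews = [
    (1, "SDR", "AE"), (1, "SDR", "SDR"), (1, "AE", "SDR"), (1, "AE", "AE"),
    (2, "SDR", "SDR"), (2, "AE", "AE"), (2, "AE", "SDR"), (2, "SDR", "SDR"),
]
rates = override_rate_by_cycle(reviews)
print(rates)  # {1: 0.5, 2: 0.25}
```

A falling override rate is one signal that a scenario may be ready for more delegation, while a flat or rising rate points back to the workflow definition or the data.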

The Cost of Staying at Lower Maturity

It might feel safe to stay at the “helper bot” (Level 0) or “information-retrieval agent” (Level 1) level, but that comfort comes at a cost. When you stay in the early maturity stages, friction often creeps in. Even as more automation is layered on, cycle times may plateau, user confidence may erode as outputs get second-guessed, and workflows can grow brittle under evolving GTM motions. What do we recommend? Go back to the planning stage and map scalable use cases. According to recent frameworks for agentic AI maturity, the real leap in value comes when agents go from retrieving data to reasoning and acting, shifting from narrow, rule-based tasks into coordinated workflows and autonomous orchestration.

Of course, alongside that value comes increased complexity and risk, but as the old saying goes: no risk, no reward. If you’re truly aiming to unlock ROI from agentic architecture, you’ll need to move beyond the safe zones and invest in the scale, data readiness, and governance layers that make autonomous agents practical. Waiting too long to plan for this, even if you are currently most comfortable with a low-level implementation, only makes that leap harder later on.

Questions That Signal Readiness

When organizations consider expanding agent responsibility, leaders often ask the following questions:

- Is our data steady enough for the agent to reason instead of following strict rules?

- Which operational decisions are stable enough to delegate?

- Do we have a clear way to review logs, exceptions, and deviations?

- Are we keeping manual steps because of real risk or habit?

- Is maintaining our current maturity level creating more work than value?

Reaching this stage usually brings up more questions than expected. Teams want to move faster, but they also want confidence in how the agent will be designed, governed, and refined over time. We have supported organizations facing these same patterns, whether it is uneven data, workflows that need restructuring, or uncertainty around how much responsibility to delegate. If you are encountering similar signals, we can help you evaluate readiness and shape a path that fits the way your systems operate today.

A Path Toward Collaborative Autonomy

Agentforce is not a jump from simple automation to full autonomy. It is a progression built on clear workflows, reliable monitoring, and steady refinement. When the environment supports learning and the team understands how responsibility expands, the model becomes predictable and sustainable.

Imagine the time and energy your team could save if your high-volume processes started to run on their own. Curious what that could look like? Let’s chat.